Artificial intelligence (AI) has rapidly moved from a futuristic concept to an everyday reality, quietly reshaping work, education, and even personal interactions. In just three years since the release of ChatGPT, AI systems are no longer just experimental tools—they’ve become fundamental infrastructure, woven into daily operations across industries and institutions. This shift is marked by both opportunities and growing concerns.

The Pervasiveness of AI

The integration of AI is happening at multiple levels. In education, teachers are using AI for tasks like grading, while some students misuse the technology for harmful purposes, such as creating non-consensual deepfakes. Businesses, including a Swedish payments company, have adopted AI to streamline operations, sometimes to the point of over-automation that has forced them to re-evaluate how much human labor they still need.

The sheer scale of AI investment is staggering; Gartner estimates spending reached $1.8 trillion last year. However, this expansion comes at an environmental cost: AI data centers consume massive amounts of energy, with single facilities rivaling the power usage of 100,000 homes—and even larger centers are in development.

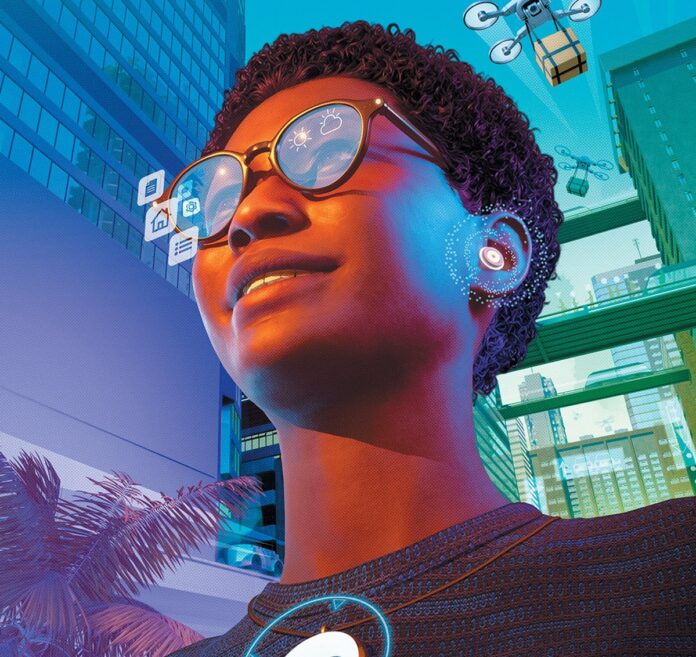

Human + Machine: The New Reality

The narrative of “human versus machine” is outdated. The current landscape is one of collaboration under real-world constraints, shaped by imperfect data and flawed institutions. AI isn’t replacing humans entirely but augmenting them, sometimes for better (clinicians using AI to reduce administrative burdens), sometimes for worse (the spread of misinformation via deepfakes).

This partnership shifts liability, as AI “copilots” often blur the line between assistance and control. The speed these systems offer can come at the cost of careful decision-making, forcing users to assess again and again whether to trust the technology’s suggestions.

Scaling Harms and Unaccountability

The most significant risk isn’t AI surpassing human intelligence, but rather the speed at which harms can scale. Deepfakes can ruin reputations before verification is possible, and even benign AI errors (such as hallucinated facts) can have serious consequences in professional settings like healthcare.

AI’s benefits are readily touted while its downsides are just as readily dismissed, and that creates a dangerous imbalance. The question remains: who bears responsibility when these systems fail? The technology’s efficiency makes accountability harder to establish, leaving individuals and institutions vulnerable to unintended consequences.

AI is no longer a future possibility; it’s a present reality that demands careful consideration of its societal, ethical, and environmental implications. As the technology continues to evolve, the challenge lies in managing its power responsibly—before the harms outpace the benefits.